Silicon Valley is a hub of emerging technology, with cutting-edge companies such as Apple, Facebook, and Google calling the California region home. Among these tech giants is Tesla, which promises a future where the streets are teeming with fully electric, autonomous vehicles. One roadblock to this vision, however, is Tesla’s recent admission that its Autopilot feature was engaged during a deadly crash involving one of its vehicles. This incident marks the second so-called ‘self-driving car’ fatality in less than a week, casting doubt on whether what industry advocates have touted as lifesaving technology is truly roadworthy.

A Fiery Wreck in Silicon Valley

On March 23, 2018, at approximately 9:27 a.m., Wei Huang, a 38-year-old Apple engineer, was traveling in a Tesla Model X sport utility vehicle on Highway 101 in Mountain View, CA, part of the state’s Silicon Valley high-tech center. The Tesla collided with a median barrier and burst into flames. The vehicle subsequently landed in the second left-most lane of Highway 101, where it was struck by two other vehicles.

Huang was taken to nearby Stanford University Medical Center, where he later died from major injuries sustained in the crash.

Tesla’s Enhanced Autopilot Feature

Like many other Tesla vehicles, Huang’s Model X was equipped with the company’s Enhanced Autopilot feature, which allows the vehicle to operate in “semi-autonomous” mode. The company’s website claims that, when Autopilot is engaged, the vehicle “will match speed to traffic conditions, keep within a lane, automatically change lanes without requiring driver input, transition from one freeway to another, exit the freeway when your destination is near, self-park when near a parking spot, and be summoned to and from your garage.”

Despite its ability to perform these admittedly sophisticated actions, Autopilot still requires significant driver intervention on the road, meaning that the Tesla is not a true self-driving car. On the SAE automation scale from 0 to 5, a self-driving car would be characterized as having achieved Level 5, or “Full Automation,” meaning the vehicle could drive itself anytime, anywhere, under any conditions, without any human intervention. In a Level 5 vehicle, human beings would essentially be cargo.

Automotive industry analysts argue that even a high-end Tesla, such as the Model X, at best offers Level 3 – “Conditional Automation.” Nevertheless, Tesla insists that “all…vehicles produced in our factory…have the hardware needed for full self-driving capability at a safety level substantially greater than that of a human driver,” even if the relevant software is not yet available.

Two Statements from Tesla

In the wake of the fatal crash, however, the company seemed to downplay its vehicles’ self-driving capabilities. On March 27, four days after the crash, Tesla issued a cautious press release stating that it had not yet been able to review the vehicle’s logs and therefore could not determine the cause of the crash or whether Autopilot had been engaged at the time. Tesla also touted Autopilot’s safety statistics, while cautioning that they do not mean that the technology “perfectly prevents all accidents — such a standard would be impossible — it simply makes them less likely to occur.”

On March 30, three days after its initial public statement, Tesla issued another, in which it announced that Huang’s “Autopilot was engaged with the adaptive cruise control follow-distance set to minimum.”

Still, the company’s press release emphasized Huang’s own responsibility for the crash, noting that he “had received several visual and one audible hands-on warning earlier in the drive and the driver’s hands were not detected on the wheel for six seconds prior to the collision.” Tesla further stated that “the driver had about five seconds and 150 meters of unobstructed view of the concrete divider with the crushed crash attenuator, but the vehicle logs show that no action was taken.”

Additionally, Tesla claimed that the damage to the vehicle was so severe because “the crash attenuator, a highway safety barrier which is designed to reduce the impact into a concrete lane divider, had been crushed in a prior accident without being replaced,” while reiterating its position that Tesla Autopilot “unequivocally makes the world safer for the vehicle occupant, pedestrians, and cyclists.”

Government, Deceased’s Family Highly Critical

Placing the blame for the fatal collision squarely on Huang’s shoulders seemingly contradicts comments made by his brother Will, who told a local television reporter that Huang had complained “7-10 times the car would swivel toward the same exact barrier during Autopilot” and that he “took it into the dealership addressing the issue, but they couldn’t duplicate it there.”

Tesla said that the company did not have records of Huang’s complaint and insisted that he only brought up issues with the navigation system.

For its part, the National Transportation Safety Board (NTSB), the government agency tasked with investigating the incident, was “unhappy with the release of investigative information by Tesla.” According to spokesman Chris O’Neil, the NTSB expects all parties involved in its investigations to inform the agency of any release prior to making information public.

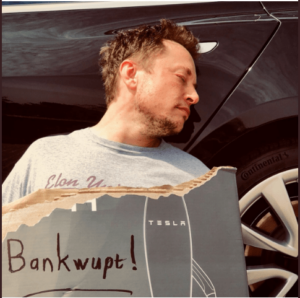

Similarly irritated with the embattled auto manufacturer are its investors. Tesla’s stock price has been plummeting of late over the company’s failure to keep up with its own production timelines. Recent financial projections inspired a perhaps ill-advised April Fool’s Day “tweet” by Tesla CEO Elon Musk, “joking” that “Despite intense efforts to raise money, including a last-ditch mass sale of Easter Eggs, we are sad to report that Tesla has gone completely and totally bankrupt.” Musk posted the tweet a mere two days after the company’s latest statement about Wei Huang’s demise.

Tesla Goes Bankrupt

Palo Alto, California, April 1, 2018 — Despite intense efforts to raise money, including a last-ditch mass sale of Easter Eggs, we are sad to report that Tesla has gone completely and totally bankrupt. So bankrupt, you can't believe it.— Elon Musk (@elonmusk) April 1, 2018

Similar Self-Driving Car Crash Deaths

The failure of Tesla’s Autopilot feature to prevent the fatal crash is likely to add to the company’s current woes – especially since similar incidents have happened before.

On January 20, 2016, in Hebei Province, China, Gao Yuning was killed when he crashed into the back of a road-sweeping truck while operating a Tesla on Autopilot. This was the first known fatal crash involving a vehicle traveling in autonomous mode.

On May 7, 2016, several months after that incident, Joshua Brown, the operator of a Tesla Model S traveling on Autopilot, was killed in Williston, FL when his vehicle failed to slow down or take evasive action before crashing into a semi-trailer that was making a left-hand turn.

According to a subsequent investigation conducted by Tesla, Brown’s crash occurred because “neither Autopilot nor the driver noticed the white side of the tractor-trailer against a brightly lit sky, so the brake was not applied.”

Uber Forced to Shut Down Program

Perhaps offering further rebuke to evangelists of self-driving car technology, Huang’s death came less than a week after another high-profile fatality involving an autonomous vehicle produced by a Silicon Valley juggernaut.

On March 18, a Volvo XC90 SUV outfitted with Uber’s sensing system was heading towards a busy intersection in Tempe, AZ at a reported 38 miles per hour. The Volvo was part of a test of self-driving vehicles being conducted by Uber, the ride-sharing pioneer. While there was a safety driver behind the wheel, the vehicle was traveling in self-driving mode at the time. The self-driving car then struck and killed Elaine Herzberg, a woman who had been crossing the street with her bicycle.

The incident is thought to be the first fatal pedestrian crash involving an autonomous vehicle. The Uber apparently failed to make an evasive maneuver or decelerate to avoid hitting the woman, prompting two experts in autonomous vehicle technology to conclude that the self-driving car itself may have been at fault.

Following the incident, Uber halted all road-testing of autonomous vehicles in the Phoenix area, Pittsburgh, San Francisco, and Toronto. Arizona Gov. Doug Ducey subsequently suspended the company’s ability to test self-driving cars on public roads in the state.
